Algorithms and Data Structure: Introduction

Published:

An algorithm refers to a sequence of instructions designed to solve a particular problem, whereas a data structure refers to a way of organizing and storing data in a computer to ensure efficient access and modification. Programming involves implementing algorithms and data structures, and their utilization greatly impacts system performance.

Measuring Algorithm Performance

- Method

- Experimental (Machine-Dependent): measure the execution time of a program

- Analytical (Machine-Independent): measure the number of steps of execution

- Evaluation metric of analyitical approach: Complexity

- Space complexity: Required memory size (static+dynamic)

- Static: predetermined size by a program

- Dynamic: varies depending on the number of input data

- Time complexity: The number of steps of execution. We care about both the average and the worst (longest).

- Both complexities are functions of the number of input data sizes, up to the leading order: Big O expression,

O(n)

- Space complexity: Required memory size (static+dynamic)

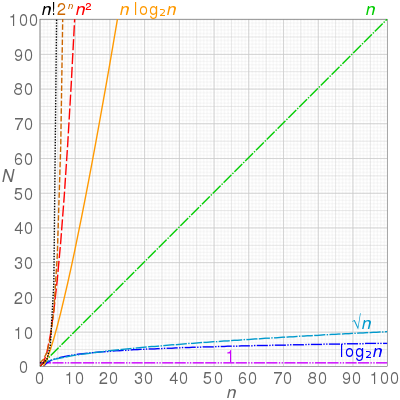

Big O Notation

The “O” in Big O notation is used to describe the upper bound of an algorithm’s running time. Big O notation only provides an upper bound on an algorithm’s running time, and actual running times may be faster in practice.

O(1): Constant.O(log n): Logarithmic growth.O(n): Linear growth.O(n log n): Log-linear or superlinear growth.O(n^2): Quadratic growth.O(n^3): Cubic growth.O(2^n): Exponential growth.O(n!): Factorial growth.

Big-Ω (Big-Omega) notation

Big-Omega refers to the lower bound of time or space complexity of an algorithm, meaning it is the best runtime scenario. Or runtime could worse than Big-Omega, but it will never be better.

Big-θ (Big-Theta) notation

Big-Theta refers to the tight bound of time or space complexity of an algorithm. Another way to think of it is the intersection of Big-O and Big-Omega, or more simply runtime is guaranteed to be a given complexity, such as n log n.

Memory structure for a program

Once you execute a program, the program is loaded into the memory. Then processor read data and commands in memory to operate. The memory allocated during this process has the following structure.

- Code: For code (execution commands). The memory size is fixed at compile. The memory is allocated (released) at the beginning (end) of the program.

- Data: For global, static variables. The memory size is fixed at compile. The memory is allocated (released) at the beginning (end) of the program.

- Heap: For dynamic memory allocation. The memory size changes over runtime.

- Stack: For local variables and parameters. The memory size is fixed at compile. The memory is allocated (released) when a function is called.

Where the choice of a data structure makes a difference?

- Memory size

- Speed at creation

- Speed of insertion/deletion of a node

- Speed of search